On Thursday, November 20, 2025, Mustafa Suleyman, CEO of Microsoft AI, dropped a quiet bombshell on X (formerly Twitter): a new experimental feature called Portraits that lets users chat with animated 2D faces while talking to Microsoft Copilot. It’s not a flashy avatar. No 3D rendering. Just a simple, lifelike portrait that blinks, shifts expression, and responds to your voice — like a digital friend sitting across from you on a screen. The twist? It’s not meant to impress. It’s meant to *feel* right.

Why a Face? Because We’re Still Humans

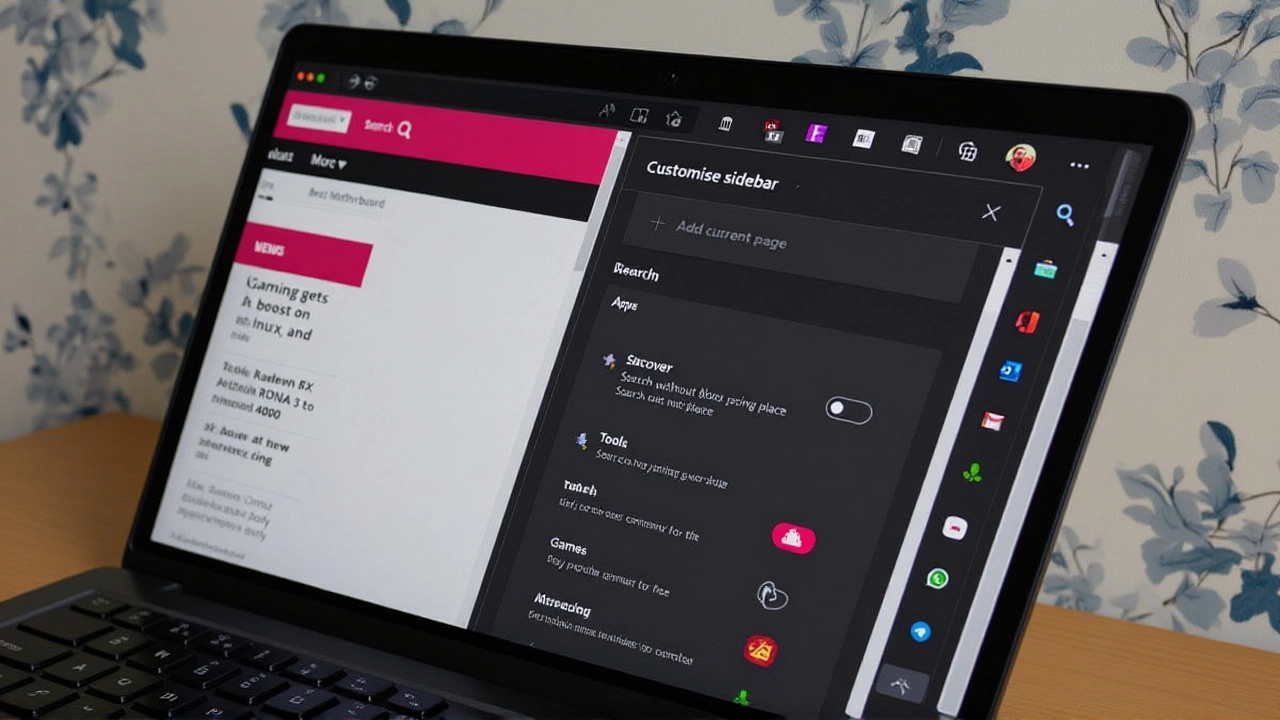

Here’s the thing: people don’t talk to Siri or Alexa like they’re machines. They whisper. They sigh. They say "please" and "thank you." That’s not because they’re polite. It’s because something in us craves a face when we’re speaking out loud. Mustafa Suleyman said it plainly: user feedback showed people "feel more comfortable talking to a face when using voice." So Microsoft built a prototype to test that instinct. Not to sell more subscriptions (though it’s locked behind Copilot Pro), but to understand whether anthropomorphizing AI actually makes interactions less jarring, more natural.

The feature isn’t even fully baked. It lives inside Microsoft Copilot Labs, the company’s experimental sandbox for ideas that might never ship. Think of it like Google’s Area 120 or Apple’s secretive R&D labs, except with more PowerPoint slides and fewer lab coats. Microsoft Copilot Portraits is the latest in a string of visual AI experiments, including screen-viewing Copilot Vision and image-editing tools that rolled out in August 2025.

Not the Same as 3D Avatars

Don’t confuse this with the earlier "Copilot Appearance" feature, which teased 3D animated avatars. Those were cinematic, almost like digital actors. Portraits? They’re flat. Two-dimensional. Like a photo that breathes. That’s intentional. "We didn’t want to build a character," one internal Microsoft source told Windows Central on November 20, 2025. "We wanted to build a presence. Something that’s there, but doesn’t demand attention."

It’s a subtle distinction. A 3D avatar can feel like a performance. A 2D portrait feels like a glance. Like someone sitting quietly in the corner of your Zoom call, nodding along. The difference isn’t just technical; it’s psychological. And Microsoft’s betting that subtlety wins.

Part of a Bigger Shift

This isn’t an isolated experiment. It’s part of Microsoft’s "Copilot Fall Release," announced October 23, 2025, which introduced 12 new features designed to make Copilot feel "more personal, more useful and more connected to the people and world around you." That’s the phrase they keep using: human-centered AI. It’s a direct response to the cold, robotic tone of early LLMs. Now, they’re trying to make AI feel like a colleague, not a tool.

Over the past year, Microsoft claims to have shipped more than 400 new features across its Copilot product line. That’s more than one a day. Among them: Work IQ, which lets Copilot know your job, your company, even your calendar habits. Agent Mode in Word and Excel, where AI autonomously drafts emails or formats spreadsheets. And Sora 2, OpenAI’s AI video generator, which can create 60-second clips from text prompts.

Portraits fits right in. It’s not about productivity. It’s about presence. About making AI feel less like an app and more like a companion — something Mustafa Suleyman admitted just a few years ago "seemed distant and uncertain." Now? "It’s real, it’s here."

Who Gets It? And When?

You won’t see Portraits unless you’re a Copilot Pro subscriber, which costs $20 a month. And even then, access is rolling out gradually. Microsoft’s doing this on purpose. They’re not launching a product. They’re running a live experiment. Some users will get it this week. Others next month. Some might never see it. The goal? To collect behavioral data: How long do people talk to the portrait? Do they smile back? Do they pause before asking hard questions? Do they feel weird?

Microsoft hasn’t shared technical details. We don’t know what model powers the facial animation. Whether it’s a fine-tuned version of Phi-4, or something proprietary. We don’t know if it syncs lip movement to speech or just uses generic expressions. The lack of transparency isn’t secrecy; it’s strategy. They want to learn before they lock in a design.

What Comes Next?

No one’s saying when, or even if, Portraits will graduate from Copilot Labs. But the direction is clear: Microsoft is building an AI that doesn’t just answer questions. It *relates*. That’s the real shift. From command-response to conversation. From query to connection.

If this works, expect to see portraits in Teams meetings. In Windows notifications. Maybe even in your car’s dashboard. Imagine your AI assistant looking you in the eye while telling you your flight’s delayed. Suddenly, bad news feels less cold.

But there’s a risk. Too much human-like behavior can creep into the uncanny valley. Or worse — make people emotionally dependent on a machine that doesn’t feel. Microsoft’s aware. That’s why they’re calling it a prototype. Not a product. Not yet.

Frequently Asked Questions

How does Copilot Portraits differ from other AI avatars?

Unlike 3D avatars that mimic full-body motion, Copilot Portraits uses flat 2D images that animate subtly (blinking, slight head tilts, facial expressions) in response to voice tone and timing. It’s designed to feel present without being distracting, avoiding the "uncanny valley" effect of overly realistic CGI characters. This makes it less resource-heavy and more scalable across devices.

Why is it only available to Copilot Pro subscribers?

Microsoft is using Copilot Pro as a testing ground for high-engagement, experimental features. The $20/month subscription allows them to gather detailed usage data from a committed user base before deciding whether to scale the feature. It also helps offset the computational cost of real-time facial animation and voice-response modeling.

What data does Microsoft collect from Portraits interactions?

Microsoft is tracking voice patterns, interaction duration, user reactions to facial cues, and whether users initiate conversation more frequently with Portraits than with text-only Copilot. They’re not recording audio beyond what’s needed for real-time response, but they are analyzing timing, hesitation, and emotional tone through anonymized metadata.

Could this lead to AI companions becoming emotionally manipulative?

That’s a major concern among ethicists. Microsoft claims Portraits is purely for research, with no intent to foster emotional dependency. Still, the line between comfort and manipulation is thin. Experts warn that if users begin to trust or rely on these faces for emotional support, companies could exploit that trust — especially if future versions include memory of past conversations or personalized "moods."

How does this compare to Apple’s rumored AI persona features?

Apple’s rumored "Siri Persona" project reportedly focuses on voice tone and personality customization, not visual avatars. Microsoft’s move into 2D portraits suggests a different philosophy: visual presence as a comfort trigger, not just vocal familiarity. Apple may wait to see if users respond positively before investing in visual AI — Microsoft is betting they will.

Will Copilot Portraits ever be free?

It’s unlikely in the near term. Microsoft has tied nearly all its most innovative AI features to Copilot Pro as a subscription driver. Even if Portraits becomes popular, it’s more valuable as a retention tool than a free perk. That said, if user feedback is overwhelmingly positive, Microsoft might offer a limited version in Windows 12’s free AI suite — but without the advanced expressions or voice-sync precision.